Julia 語言設計與 JIT 編譯器¶

Julia 為什麼快?¶

Julia Taiwan 發起人 杜岳華¶

2019.8.18¶

Outline¶

- 型別系統(type system)

- 多重分派(multiple dispatch)

- 泛型程式設計(generic programming)

- Metaprogramming

- Reflection and introspection

- JIT compiler

Introduction¶

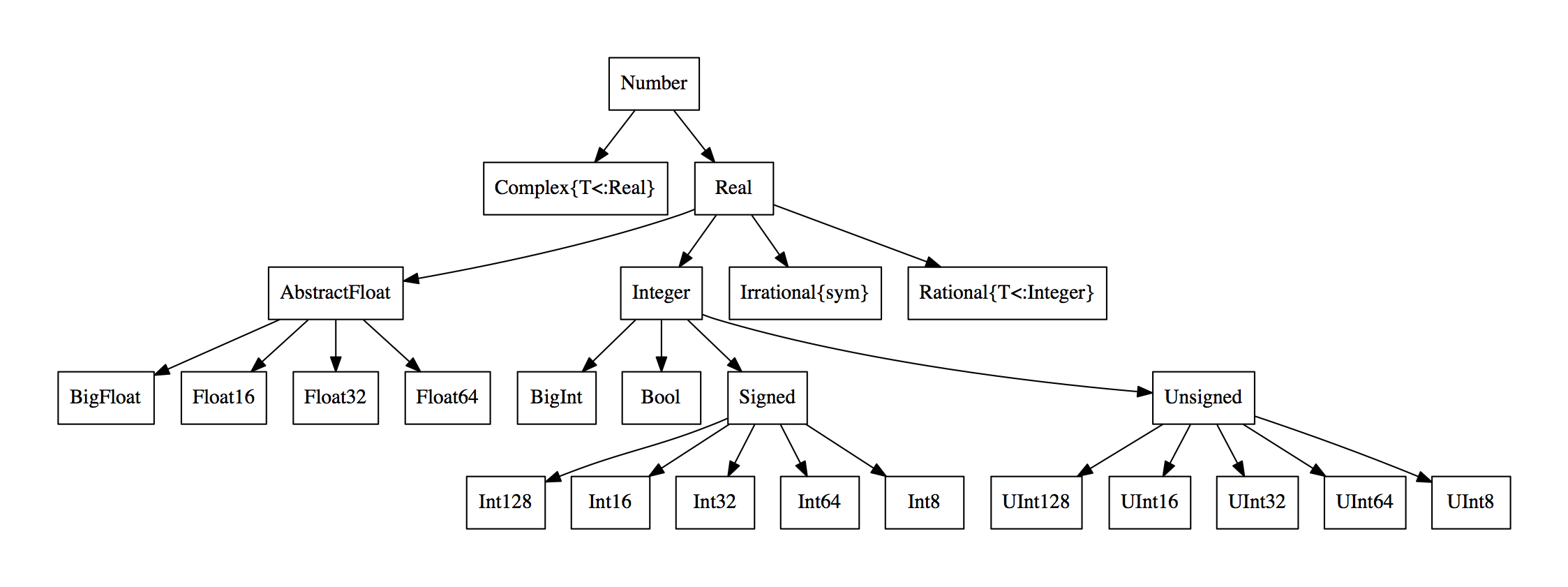

Type system¶

Type system¶

Type system¶

- Dynamic type, similar to symbolic programming

- Set-theoretic type

Multiple dispatch¶

Generic programming¶

- Parametric types and parametric methods

- Similar to multiple dispatch with parametric polymorphism.

- All types are first-class: can be dispatched and declared

Metaprogramming¶

- Macro

- Generated function

Macro¶

In [1]:

macro sayhello(name)

return :( println("Hello, ", $name) )

end

Out[1]:

@sayhello (macro with 1 method)

In [2]:

@sayhello "Andy"

Hello, Andy

Ref. official docs

Generated function¶

In [3]:

@generated function foo(x)

Core.println(x)

return :(x * x)

end

Out[3]:

foo (generic function with 1 method)

In [4]:

foo(5)

Int64

Out[4]:

25

In [5]:

foo("5")

String

Out[5]:

"55"

Ref. official docs

Compile time¶

Compiler overview¶

Compiler compile time¶

Compiler runtime¶

Compiler details¶

Compile time¶

How Julia compiler works?¶

Static Single Assignment form (SSA)¶

- Each variable is assigned exactly once.

- Every variable is defined before it used.

Simplify and improve the compiler optimization¶

- constant propagation

- value range propagation

- sparse conditional constant propagation

- dead code elimination

- global value numbering

- partial redundancy elimination

- strength reduction

- register allocation

Runtime¶

Runtime¶

Dispatch system¶

- Type-based dispatch system

- Dispatching a single tuple type of arguments

Functions¶

Julia functions are generic functions.

A generic function is a single function and consists of many methods.

The methods of a function is stored in a method table.

All objetcs in Julia are callable.

JIT compilation¶

Staged programming¶

Leverage the type in input of code and generate optimized code¶

@generated function foo(::Array{T,N}, ...) where {T,N}

...

x = Matrix{T}()

for i = 1:N

...

end

end

Hackable compiler¶

Python and Numba¶

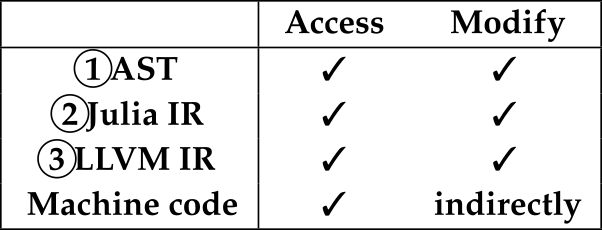

Reflection and introspection¶

In [6]:

foo(x, y) = x + y # Source code

Out[6]:

foo (generic function with 2 methods)

In [7]:

expr = :(foo(1, 2))

Out[7]:

:(foo(1, 2))

In [8]:

dump(expr) # AST

Expr

head: Symbol call

args: Array{Any}((3,))

1: Symbol foo

2: Int64 1

3: Int64 2

In [9]:

@code_lowered foo(1, 2) # Julia IR

Out[9]:

CodeInfo( 1 ─ %1 = x + y └── return %1 )

Reflection and introspection¶

In [10]:

@code_typed foo(1, 2) # Typed Julia IR

Out[10]:

CodeInfo( 1 ─ %1 = (Base.add_int)(x, y)::Int64 └── return %1 ) => Int64

In [11]:

@code_llvm foo(1, 2) # LLVM IR

; @ In[6]:1 within `foo'

define i64 @julia_foo_12964(i64, i64) {

top:

; ┌ @ int.jl:53 within `+'

%2 = add i64 %1, %0

; └

ret i64 %2

}

In [12]:

@code_native foo(1, 2) # Assembly

.text ; ┌ @ In[6]:1 within `foo' ; │┌ @ In[6]:1 within `+' leaq (%rdi,%rsi), %rax ; │└ retq nopw %cs:(%rax,%rax) ; └

Metaprogramming interfaces¶

CUDAnative.jl¶

Details in multiple dispatch¶

Object graph¶

Multiple dispatch¶

- Call

f(x, y) - Method table:

typeof(f).name.mt - Argument tuple:

Tuple{typeof(f), typeof(x), typeof(y)}

Dispatch: look up method in method table with argument tuple¶

Parameter information is in the name of function.¶

Ref. Function calls

Multiple dispatch¶

In [13]:

typeof(sum)

Out[13]:

typeof(sum)

In [14]:

typeof(sum).name

Out[14]:

typeof(sum)

In [15]:

typeof(typeof(sum).name)

Out[15]:

Core.TypeName

Multiple dispatch¶

In [16]:

typeof(typeof(sum).name.mt)

Out[16]:

Core.MethodTable

In [17]:

typeof(sum).name.mt

Out[17]:

13 methods for generic function sum:

- sum(x::Tuple{Any,Vararg{Any,N} where N}) in Base at tuple.jl:395

- sum(r::StepRangeLen) in Base at twiceprecision.jl:536

- sum(r::AbstractRange{#s72} where #s72<:Real) in Base at range.jl:970

- sum(x::SparseArrays.AbstractSparseArray{Tv,Ti,1} where Ti where Tv) in SparseArrays at /build/julia/src/julia-1.1.1/usr/share/julia/stdlib/v1.1/SparseArrays/src/sparsevector.jl:1306

- sum(a::AbstractArray{Bool,N} where N) in Base at reduce.jl:417

- sum(arr::AbstractArray{BigInt,N} where N) in Base.GMP at gmp.jl:547

- sum(arr::AbstractArray{BigFloat,N} where N) in Base.MPFR at mpfr.jl:724

- sum(a::AbstractArray; dims) in Base at reducedim.jl:648

- sum(::typeof(abs), x::SparseArrays.AbstractSparseArray{Tv,Ti,1} where Ti where Tv) in SparseArrays at /build/julia/src/julia-1.1.1/usr/share/julia/stdlib/v1.1/SparseArrays/src/sparsevector.jl:1331

- sum(::typeof(abs2), x::SparseArrays.AbstractSparseArray{Tv,Ti,1} where Ti where Tv) in SparseArrays at /build/julia/src/julia-1.1.1/usr/share/julia/stdlib/v1.1/SparseArrays/src/sparsevector.jl:1331

- sum(a) in Base at reduce.jl:416

- sum(f, a::AbstractArray; dims) in Base at reducedim.jl:649

- sum(f, a) in Base at reduce.jl:399

Method sorting¶

- A concrete type is assigned a unique integer identifier (hash consing).

- These integer identifiers are used to loop up methods efficiently in a hash table.

- Linear search

- Sorting methods by specificity.

Details of compilation stages¶

Compilation stages¶

- parsing -

parse: text -> Expr (femotolisp) - expand macro -

macroexpand - syntax desugaring

- statementize control flow

- resolve scopes

- generate IR (lower) -

@code_lowered - top-level eval, method sorting -

methods - type inference

- inlining, high-level optimizations -

@code_typed - LLVM IR generation -

@code_llvm - LLVM optimizer, native code generation -

@code_native